Artificial intelligence and machine learning help researchers take a giant step toward more accurate stock assessments.

Since the early 2000s, New England and Mid-Atlantic scallop fisheries have been managed sustainably through temporary area closures and periodic harvests by vessels limited to seven-person crews. This sound management depends on accurate numbers, and the New England Fishery Management Council (NEFMC) relies on data drawn from several sources for its Atlantic sea scallop stock assessments. “We get data from Coonamessett Farm Foundation (CFF), Woods Hole Oceanographic Institution, the Commercial Fisheries Research Foundation, and others,” says Teri Frady, communications chief at NOAA Fisheries. But Frady is particularly interested in new AI-augmented data coming from the work of Dvora Hart at the Northeast Fisheries Science Center (NEFSC) in Woods Hole, Massachusetts. “I’m always interested in what Dvora is doing,” says Frady.

Hart, the lead assessment scientist for Atlantic sea scallops at the NEFSC, counts scallops on Georges Bank and in the Mid-Atlantic, and until recently it was a tedious business. “We use a trawler to tow a Habcam V4 about 2 meters off the bottom,” says Hart. “In a survey, we can collect 4 million images, and we annotate about 80,000 of them by hand.” That meant Hart and her team had to examine 80,000 images and count the scallops in each one, a process that could take four to eight weeks.

The data that the NEFSC team generates goes primarily to the New England Council and is used to make management decisions, including when and for how long to open closed areas. Hart notes that artificial intelligence and machine learning are helping researchers improve their game and compile more accurate data.

“We’ve partnered with a private AI company called Kitware,” says Hart. “And we’re using their computer vision program, VIAME, to count scallops.” Hart explains that the system is based on convolutional neural networks (CNNs), a class of deep learning algorithms designed primarily for image classification, object detection, and segmentation. “They act something like the human brain,” says Hart. “And they have revolutionized what AI can do. We’re seeing a huge jump in the effectiveness of these computer programs.”

But a computer vision system requires training, and Hart has to teach the program to see and count scallops. To do that, she feeds it training data. “I set up 50,000 to 60,000 manually annotated images and give them to the CNN until it figures out how to see scallops.”

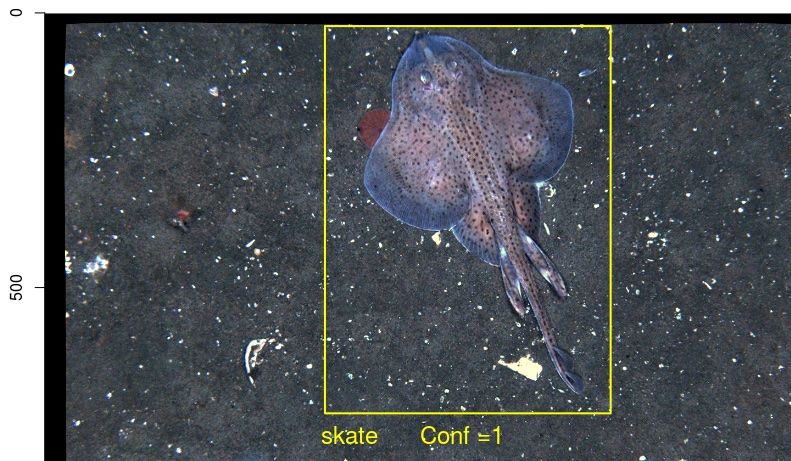

Hart notes that the system is not perfect. “It misses about 5 percent of the scallops, so we have to adjust the count, and we also have to adjust for how many shells it counts as live scallops.” The system also assigns a confidence score to each object it identifies as a scallop. “A number 1 confidence level is almost always a scallop,” says Hart. “But only a small percentage of what the machine counts at a 0.3 confidence level are true scallops.”
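The two corrections Hart describes, discarding low-confidence detections and scaling the count for scallops the detector misses or misclassifies, can be sketched as simple arithmetic. The Python below is a minimal illustration under stated assumptions, not the NEFSC's actual correction procedure: the function name `adjusted_count`, the 0.5 cutoff, and the `precision` parameter (the assumed share of kept detections that are real live scallops rather than empty shells) are hypothetical; only the roughly 5 percent miss rate comes from Hart.

```python
def adjusted_count(scores, threshold=0.5, precision=1.0, miss_rate=0.05):
    """Estimate the number of live scallops behind a set of detections.

    Hypothetical sketch, not the NEFSC method.
    scores     -- one detector confidence value in [0, 1] per detection
    threshold  -- hypothetical cutoff; detections scoring below it are dropped
    precision  -- assumed share of kept detections that are real live scallops
                  (corrects for false positives such as empty shells)
    miss_rate  -- assumed share of real scallops the detector never reports
                  (about 0.05 per Hart; in practice it varies with threshold)
    """
    if not 0.0 <= miss_rate < 1.0:
        raise ValueError("miss_rate must be in [0, 1)")
    if not 0.0 <= precision <= 1.0:
        raise ValueError("precision must be in [0, 1]")
    kept = sum(1 for s in scores if s >= threshold)
    true_detected = kept * precision          # detections that really are live scallops
    return true_detected / (1.0 - miss_rate)  # scale up for scallops never detected


# Hypothetical scores for one image: three detections clear the 0.5 cutoff.
scores = [0.98, 0.91, 0.75, 0.42, 0.31, 0.12]
estimate = adjusted_count(scores, threshold=0.5, precision=0.9, miss_rate=0.05)
print(f"{estimate:.2f}")  # prints 2.84
```

Raising the cutoff trades false positives for misses, so a real survey would presumably estimate precision and miss rate together, from hand-annotated images, at whatever cutoff it adopts.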

As noted above, Hart and the NEFSC team have never lacked data. Until they started using computer vision, however, they could use only a fraction of the information they collected. “We tow the Habcam V4 over most of Georges Bank, the Channel, and the Mid-Atlantic,” says Hart. “It’s mounted on a frame with four strobes, side-scan sonar, and altimetric and attitude sensors.” The camera and its protective cage are hardwired to a control center on the research vessel. “It’s towed with an armored cable that also has the fiber optic and power cable inside,” says Hart. “We have a control room set up on the boat where a pilot and a co-pilot can operate the camera and see what it is seeing in real time.” The pilot and co-pilot sit in front of several screens, watching the camera imagery, side-scan sonar, and a forward-looking camera. “The pilot controls the camera’s depth and can raise it up when there’s an obstruction,” says Hart.

In addition to the towed Habcam V4, the team uses an autonomous underwater drone equipped with the same two cameras and strobes used on the Habcam V4. “It’s not as efficient as the towed camera,” says Hart. “It can only move at 1.5 knots, so it can’t cover as much area as we can towing at 6 knots. But it can be a hundred miles away surveying areas of interest, and we can pick it up after four or five days and download the images. It’s also good for use in wind farms, where we can’t get in to tow the Habcam V4.”
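Because a knot is one nautical mile per hour, the speed trade-off Hart describes comes down to multiplication. This short Python sketch assumes, purely for illustration, that both platforms run around the clock at the quoted speeds; real surveys include transit, turns, and downtime, and track length ignores swath width, so it is not a measure of area covered. The helper name `track_length_nmi` is hypothetical.

```python
def track_length_nmi(speed_knots, hours):
    """Survey track length in nautical miles (1 knot = 1 nautical mile per hour)."""
    return speed_knots * hours


# Assumes continuous operation at the speeds Hart quotes.
auv_four_days = track_length_nmi(1.5, 4 * 24)  # autonomous drone, 4 days
towed_one_day = track_length_nmi(6.0, 24)      # towed Habcam V4, 1 day
print(auv_four_days, towed_one_day)            # prints 144.0 144.0
```

Under these assumptions, the towed camera covers in one day roughly the track the drone covers in four, which is why the drone's value lies in reaching places a tow cannot, such as wind farms.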

Using the computer vision program, the NEFSC can now annotate far more imagery than before, and far faster. “We still don’t look at every image,” says Hart. “But where we used to look at 80,000, we now look at 800,000 in much less time. The computer vision program works 24/7 and doesn’t take coffee breaks. It can annotate 10,000 images an hour,” she says. “It can do in a day what we did in a month or more.”
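The figures Hart cites can be checked with a few lines of arithmetic. The Python below uses only numbers from this article (4 million images per survey, 80,000 annotated by hand, 800,000 processed now, 10,000 images an hour) and assumes, for illustration, that the program runs nonstop; the constant and function names are hypothetical.

```python
IMAGES_PER_SURVEY = 4_000_000  # images collected in one survey
MANUAL_IMAGES = 80_000         # images annotated by hand per survey
AUTO_IMAGES = 800_000          # images now annotated with computer vision
AUTO_RATE_PER_HOUR = 10_000    # images the program annotates per hour


def share_of_survey(n_images, total=IMAGES_PER_SURVEY):
    """Fraction of a survey's images that get annotated."""
    return n_images / total


def hours_needed(n_images, rate_per_hour=AUTO_RATE_PER_HOUR):
    """Processing time in hours, assuming the program runs nonstop."""
    return n_images / rate_per_hour


print(f"by hand: {share_of_survey(MANUAL_IMAGES):.0%} of the images")
print(f"with computer vision: {share_of_survey(AUTO_IMAGES):.0%} of the images")
print(f"{AUTO_IMAGES:,} images take {hours_needed(AUTO_IMAGES):.0f} hours "
      f"({hours_needed(AUTO_IMAGES) / 24:.1f} days)")
print(f"one day of processing: {AUTO_RATE_PER_HOUR * 24:,} images")
```

One day of nonstop processing (240,000 images) is three times the 80,000 images that once took four to eight weeks to annotate by hand, consistent with Hart’s “in a day what we did in a month or more.”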

Hart believes this will enable the NEFSC to provide the management council with more timely and accurate data and free up staff to do more in-depth research. “We can start to do habitat association with scallop abundance, for example,” she says, referring to analysis of bottom types preferred by scallops. “That’s been on our wish list.”

Another item on Hart’s wish list is getting the information the NEFSC generates to the public. “I’d love to have a public website where people could browse the images,” she says. “But there are security concerns, and we can’t do that.” At present, the councils are not getting the information either. “We are working on our adjustments, and then those will have to be peer-reviewed,” says Hart. Given the timeline for the next stock assessment in 2025 and further work on her models, Hart does not expect a peer review to take place until late 2025, which means the councils will not have access to AI-generated data until 2026.

“But we’re doing a lot more with this than just counting scallops,” says Hart, noting that she can now spend more time examining what else the images processed by VIAME reveal. “The computer vision program is learning to see other species of interest, skates, whelks, crab, and hake, for example. Another interesting thing we’re seeing is a species called a snake eel that is far more abundant than we knew because they slip through the trawl surveys. We still don’t know what role they play in the ecosystem.”

NOAA Fisheries researchers are also using computer vision systems in other parts of the country. According to Teri Frady, NOAA is combining very high resolution (VHR) satellite imagery with artificial intelligence and cloud computing to build an operational system that identifies North Atlantic right whales.

“In the Southeast, they are using computer vision to monitor coral reefs,” says Hart. “And on the West Coast, they are using it to count rockfish.”

As computer vision programs like VIAME become more refined and are peer-reviewed for use in stock assessments, the councils that manage fisheries will be able to make decisions with greater confidence, and researchers will have more time to understand marine systems on a more holistic level.